Demystifying AI Agents: Understanding Their Capabilities Without Overestimating Agency

As AI agents move closer to widespread use, it’s essential to clarify what they are and what they are not. Despite the groundbreaking advances in large language models (LLMs) powered by deep learning, it’s crucial to distinguish their sophisticated capabilities from the concept of agency.

The Architectural Essence of LLMs

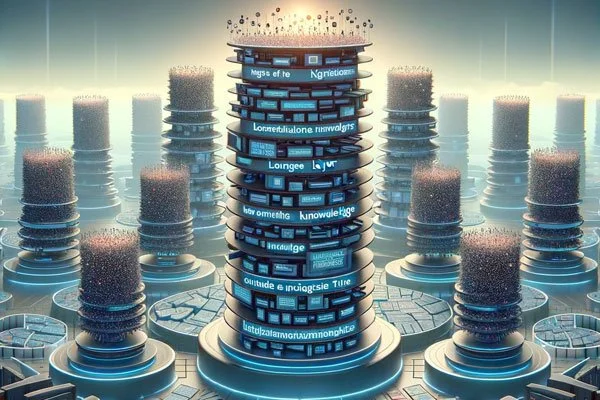

At the core of LLMs lies a novel approach to deep learning, which can be metaphorically visualized as a forest of knowledge towers. Each tower represents a center of knowledge, constructed layer by layer, to form a deep understanding. The true innovation of LLMs lies in their ability to interact with multiple knowledge towers simultaneously, merging their insights to produce comprehensive outputs. This ability to synthesize information across various domains gives LLMs an appearance of intelligence beyond the traditional, narrow applications of deep learning. However, it’s imperative to understand that this does not equate to agency. LLMs operate by reorganizing and integrating information provided by humans, lacking any form of consciousness or self-driven intent.

The Role of Stochastic Gradient Descent

Central to the training of LLMs is stochastic gradient descent, an optimization algorithm that repeatedly adjusts model parameters, using small random samples of the training data, to reduce the gap between predicted and actual outcomes. This process can be likened to an antiviral system: it acts as a filter that refines rather than proliferates information, in stark contrast to the amplifying nature of social media platforms.
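To make the idea concrete, here is a minimal sketch of stochastic gradient descent on a toy problem: fitting a straight line to a handful of points. It is an illustration only, not how any particular LLM is trained. The function name `sgd_linear_fit`, the learning rate, the epoch count, and the toy data are all assumptions chosen for this example.

```python
import random

def sgd_linear_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0                    # start with uninformed parameters
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)           # "stochastic": visit examples in random order
        for x, y in samples:
            error = (w * x + b) - y    # predicted outcome minus actual outcome
            # Gradient of 0.5 * error**2 with respect to w and b,
            # applied as a small step that shrinks the error.
            w -= lr * error * x
            b -= lr * error
    return w, b

# Hypothetical, noise-free toy data generated from y = 2x + 1
points = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = sgd_linear_fit(points)
print(round(w, 2), round(b, 2))
```

On this toy data the fitted values should land very close to the slope 2 and intercept 1 used to generate the points. The same loop, scaled up to billions of parameters and far more elaborate models, is the refining process the paragraph above describes: each step nudges the parameters so that predictions sit closer to observed outcomes.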

The Creation of New Knowledge Towers

When LLMs are presented with new queries, they traverse their forest of knowledge towers, amalgamating existing information to “create” a new tower. It’s important to note that while these new towers may look innovative, they are inherently limited by the information contained within the existing towers. These models do have the potential to generate novel outcomes, akin to significant discoveries such as the “Google’s Geometry” solution, but such advances would require additional logic systems, pointing towards a “hybrid AI” future.

Agency Within AI: A Critical Examination

The question of whether AI can possess agency, defined here as a constant, self-aware monitoring of its own activities, remains open. What is clear is that current AI systems, including LLMs, do not possess it. They operate more like sophisticated metabolic processes running in the background: more complex than any technology previously developed, but neither sentient nor autonomous.

The Ethical Landscape of AI Use

As we navigate the implications of AI agents, it’s vital to recalibrate the conversation towards human responsibility. The potential for AI to “break bad” is not a concern rooted in the technology itself but in how humans might misuse it. The tool is neutral; the accountability lies with the users. This perspective underscores the importance of framing discussions about AI within a human context, emphasizing that the responsibility for ethical use rests on our shoulders.

Conclusion

In summary, while AI agents, particularly LLMs, represent a significant leap forward in our ability to process and synthesize information, they do not embody agency. Understanding their capabilities within the correct context is essential to harnessing their potential responsibly. As we continue to develop and interact with these technologies, maintaining a human-centric dialogue about their use and impact is paramount.

For more information on Large Language Models please visit: https://arxiv.org/abs/2401.03428
